Opening note addressed to Forum participants by April Chin, Co-CEO of Resaro.

You say either, I say either

You say neither and I say neither

Either, either, neither, neither

Let's call the whole thing off, yes

You like potato and I like potato

You like tomato and I like tomato

Potato, potahto, tomato, tomahto

Let's call the whole thing off

—‘Let’s call the whole thing off’, by Ella Fitzgerald

Five years ago, I worked on my first AI testing framework with data scientists and engineers. The goal was inspiring: to make AI governance frameworks executable. But the process felt exactly like this song. It was hard to bridge the worlds of AI governance and testing.

You want interpretable ML and my ML is interpretable. But you say it’s not interpretable. So what is interpretable? And why can’t you interpret it? Well, why can’t you make it more interpretable?

Five years on, I’m even more convinced that a potato is a potato no matter how you pronounce it. We share the same desire for innovative and trustworthy AI. But the reality is that our paradigms and systems today are not designed to achieve this outcome effectively.

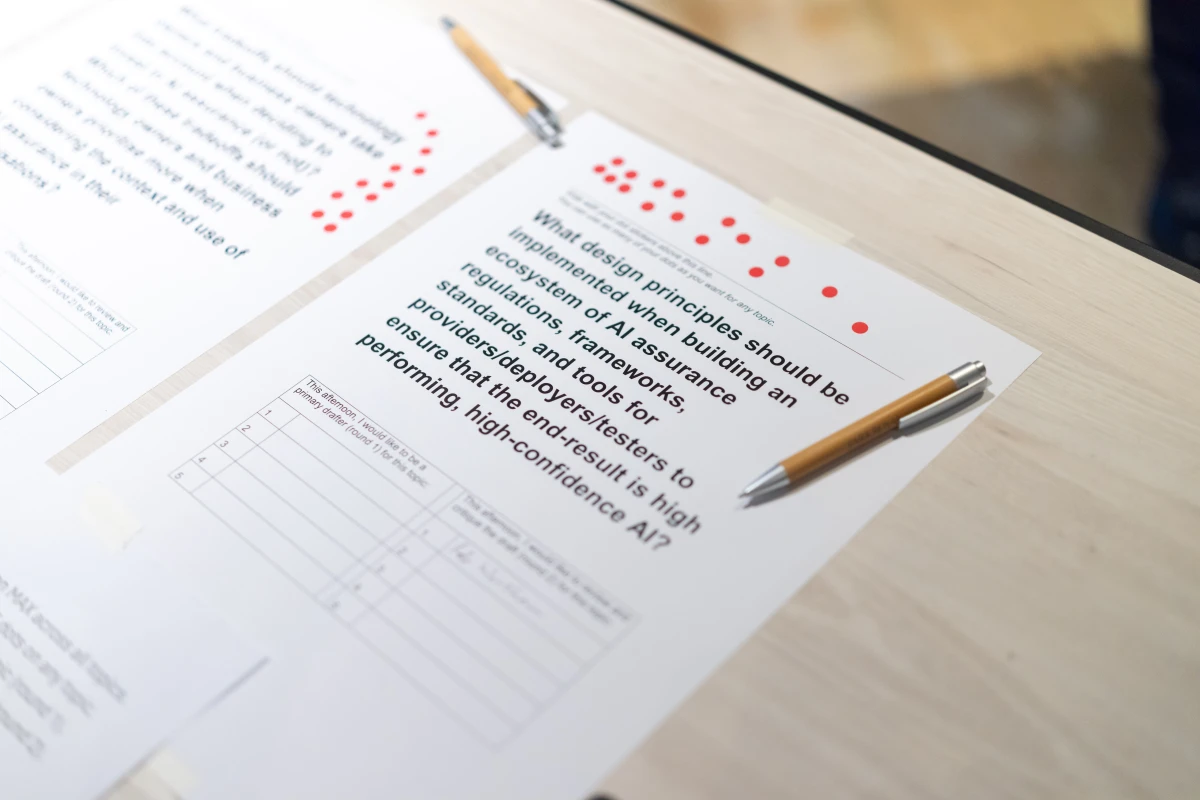

And that’s why we’ve organised the first-ever AI Assurance Forum, which assembles a cross-section of the global AI leadership in government, industry, academia, and civil society, with backgrounds in engineering, business, economics, physics, social sciences and more. The diversity is intentional. To enable innovative and trustworthy AI, we need AI assurance to bridge the technical, business and governance worlds.

AI assurance refers to the techniques, processes and practices aimed at providing confidence that AI components or AI-enabled systems function as intended. AI assurance seeks to minimise the risk of producing unintended and undesirable outcomes, and develop confidence in the operations of AI systems.

We deliberately designed the Forum to be a space for honest, sometimes uncomfortable, but ultimately constructive conversations. It was a space where each person was invited not to perform or persuade, but to surface what matters most — even if it's under-discussed, inconvenient, or unresolved.

I am deeply grateful to our partners and Forum participants for taking the leap to invest their time in this process of emergence. Discovering not just where we align but also why we diverge on our values, priorities, assumptions and risk appetites is critical for building clarity for action, especially at this juncture of accelerating AI innovation and adoption.

In this Report, we have distilled our insights, not as a consensus document but as a synthesis of the AI assurance themes, patterns and tensions that emerged from the Forum. Our hope is that this will serve as a foundational reference for the way forward in enabling Assurance for Rapid AI Adoption that will create lasting value.

Download the full Report here: https://resaro.ai/insights/whitepapers/temasek-x-resaro-ai-assurance-forum-report